Integrate with One API

This document describes how to integrate Monkeys’ OpenAI-compatible large language model interface into One API. For installation instructions of One API, please refer to its official documentation.

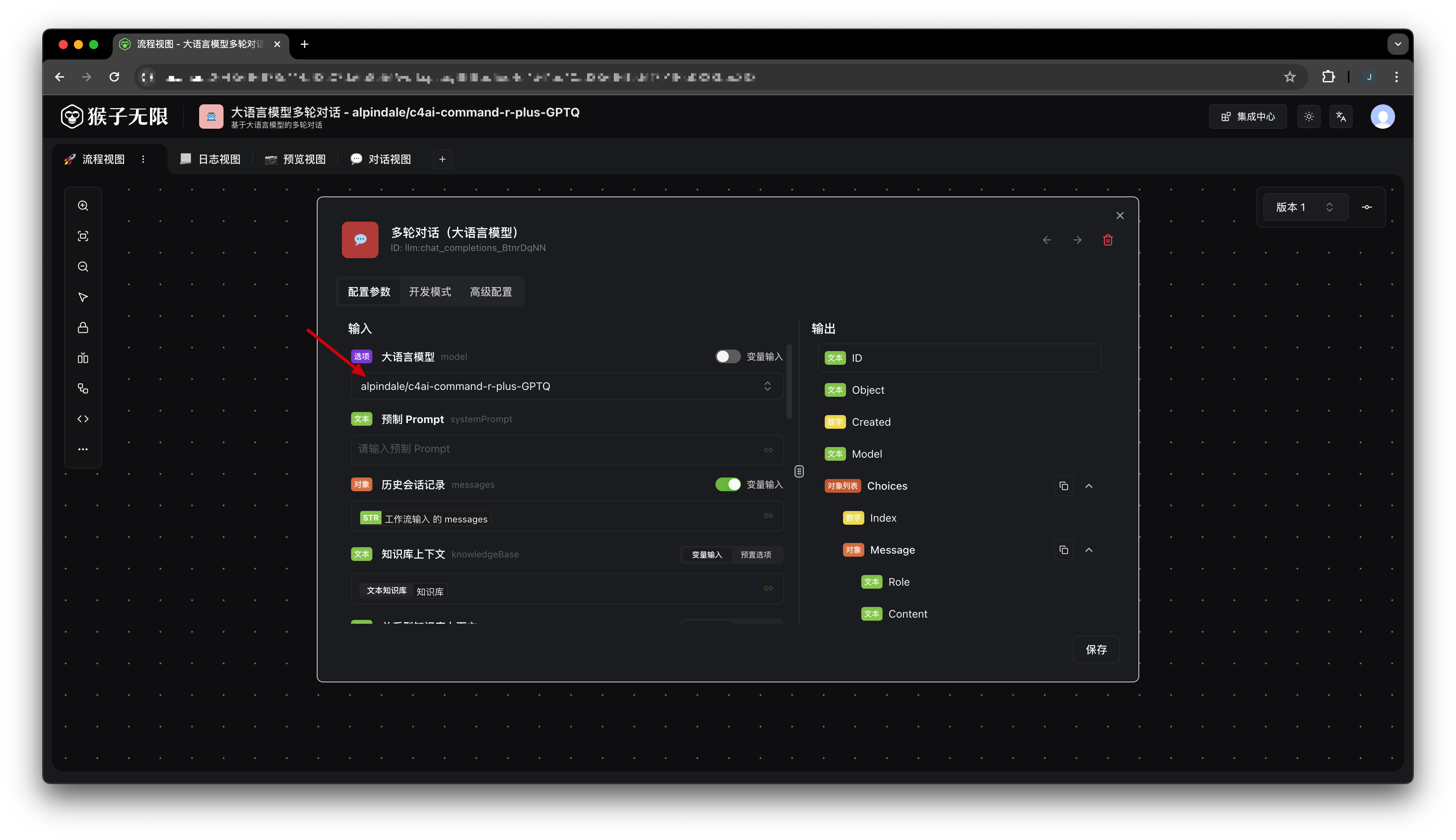

1. Create a large language model workflow in the Monkeys console

For detailed steps on creating a workflow, see Built-in Tools (Large Language Models).

There are a few points to note:

- Select the model you need to use. Here we choose

alpindale/c4ai-command-r-plus-GPTQ.

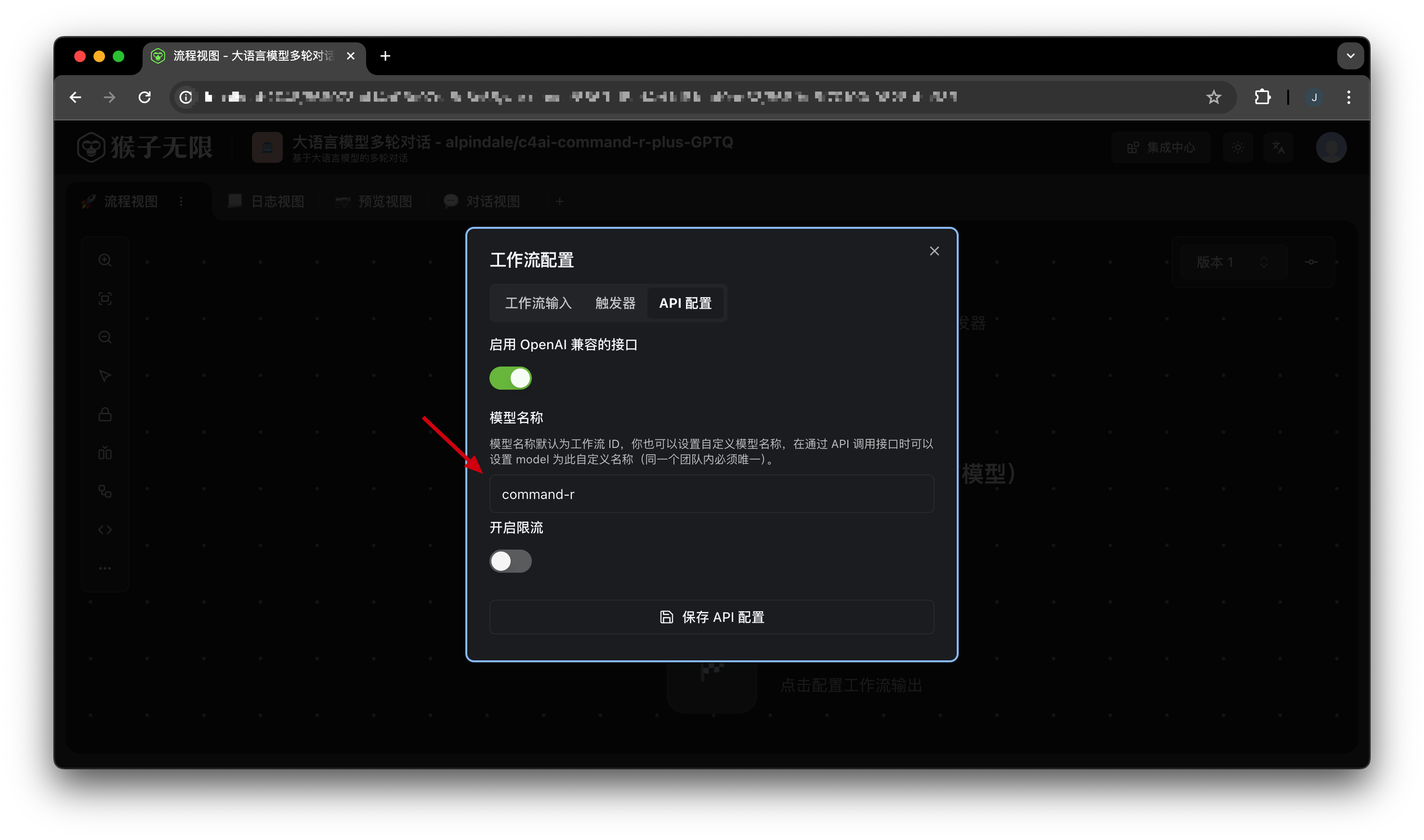

- In the API Settings of the Start node, set the Model Name to the desired model name. Here we set it to

command-r.

By default, the

modelparameter of Monkeys’ OpenAI interface corresponds to the workflow ID. You can modify this value to set the model name. (Model names must be unique within the same team)

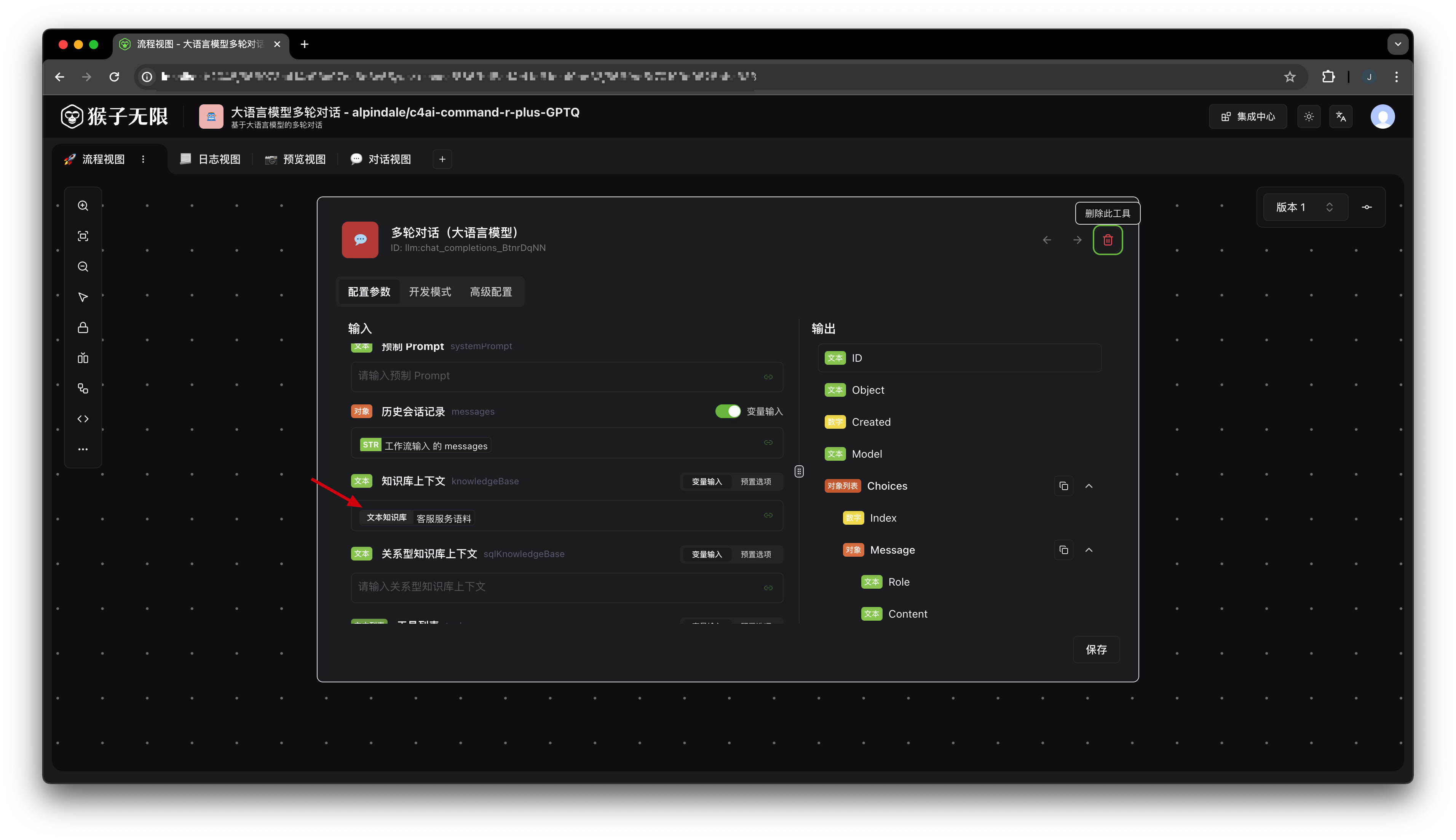

- (Optional) You can set a knowledge base context for this workflow, allowing the large model to automatically use the knowledge in the knowledge base to answer questions.

For details, see Built-in Tools (Private Data Search).

Here we add a customer service corpus:

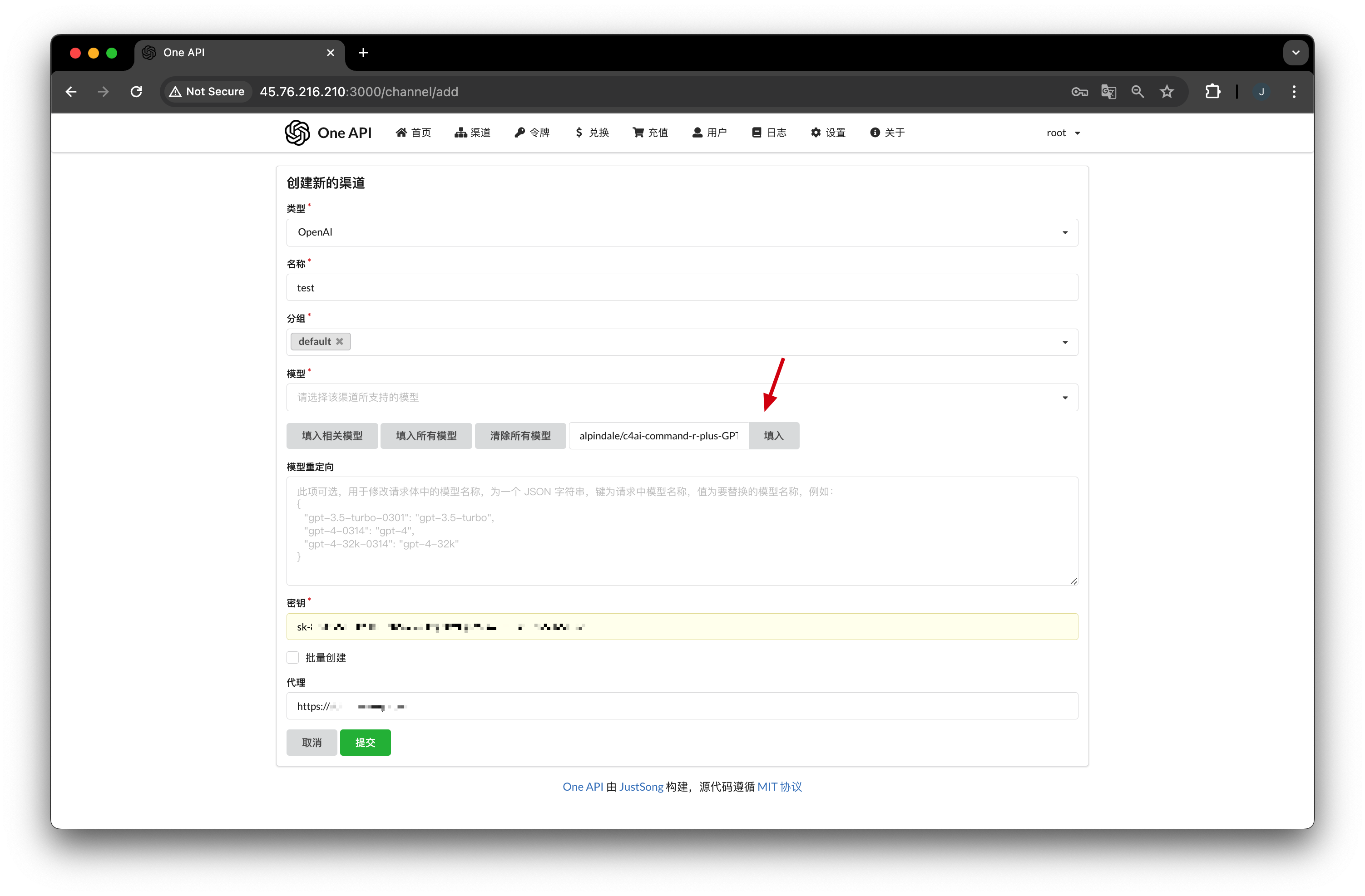

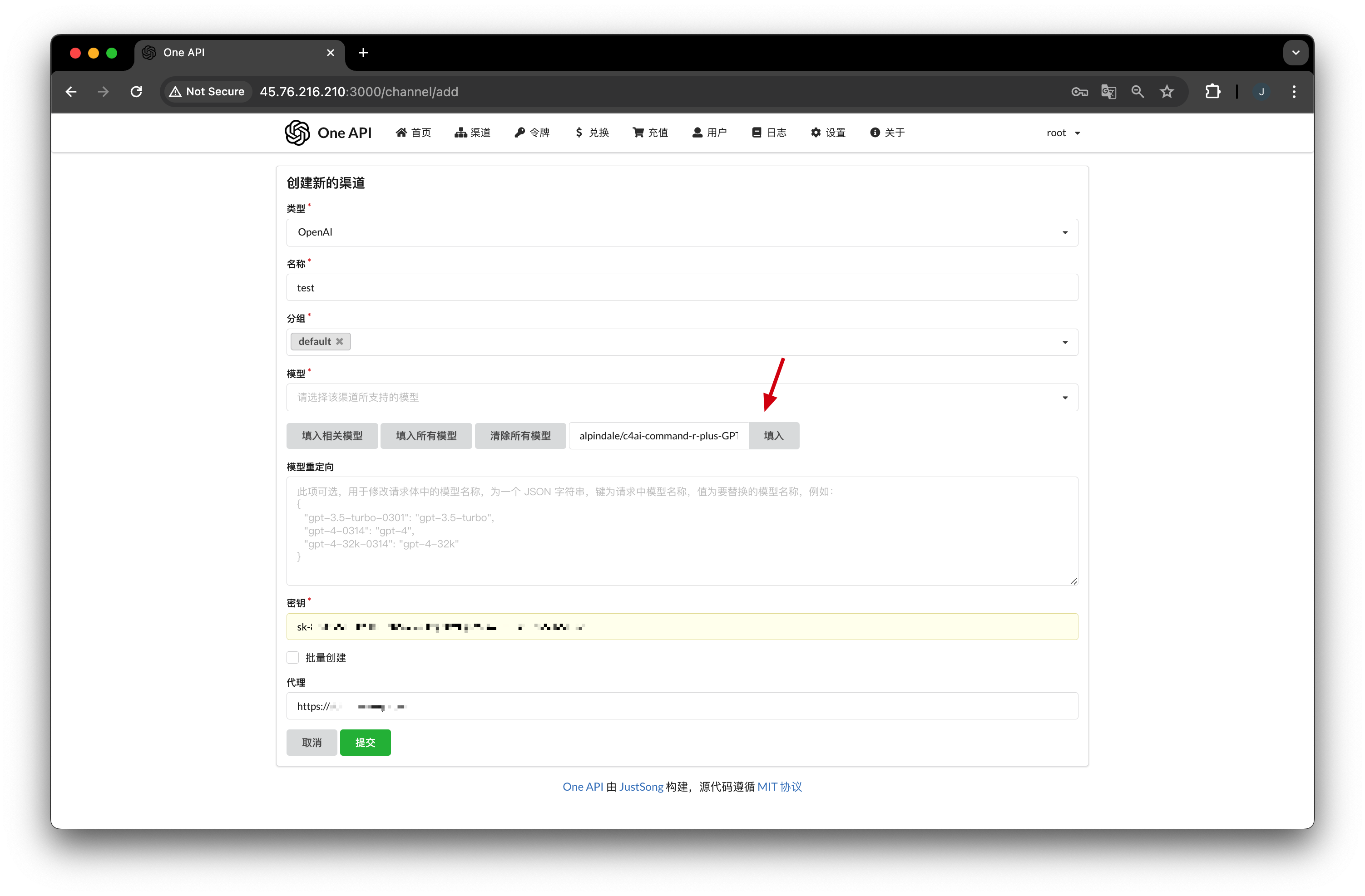

2. Create a channel

In the Channels page of One API, create a new channel and fill in the following information:

- Type: Select OpenAI.

- Name: Enter any name.

- Group: Enter any group.

- Model: Enter the custom model name we set above (see step 1).

- Key: Monkeys’ API Key, which can be created or obtained on the settings page.

- Proxy: Fill in the address of the Monkeys service, without the

/v1suffix, e.g.,https://ai.infmonkeys.com.

Click create.

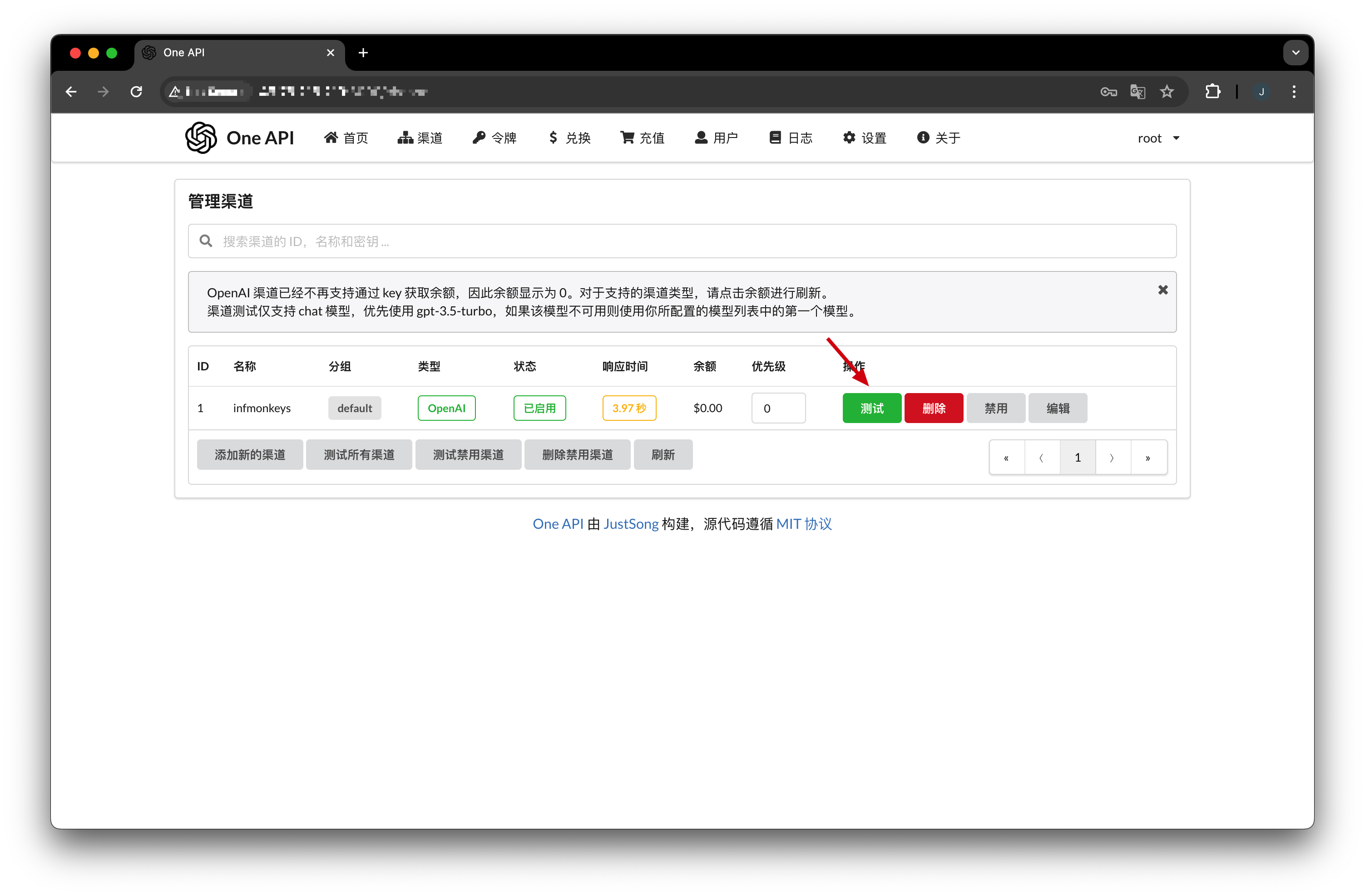

Test Channel Connectivity

Click the Test button to check if the connection is successful.

3. Create a token

In the Tokens page of One API, create a new token and fill in the following information:

- Name: Enter any name.

- Model Scope: Select the model we added above.

Click create.

4. Call the API

Modify the API address, token, and model to your actual address, token, and model.

curl --location 'https://your-one-api-service.com/v1/chat/completions' \--header 'Authorization: Bearer sk-xxxxxxxx' \--header 'Content-Type: application/json' \--data '{ "model": "alpindale/c4ai-command-r-plus-GPTQ", "messages": [ {"role": "user", "content": "Hello"}, {"role": "assistant", "content": "Hello, how can i help you today?"}, {"role": "user", "content": "What is today"} ], "stream": true}'